Cogito Blog

Know How Voice Analysis Works & Why it’s Important

There have been significant advancements in voice analysis technology in recent years. Following decades of research, major speech-related technological challenges have been overcome, such as accurately recognizing text from speech and producing intelligible and natural-sounding computer voices. This progress is leading to a massive uptake in speech technology in both consumer and business applications.

These rapid advancements are in large part due to the success of machine learning with deep neural networks. The pattern that has proven effective in domains like image processing and natural language processing also holds in speech processing: give the model the rawest representation possible and introduce as few human assumptions as you can. In this post, I will focus on one representation that is central to AI voice analysis: the time-frequency images known as spectrograms.

The Value of Spectrograms

In my academic past as a researcher in a phonetics laboratory, I was frequently exposed to spectrograms. These time-frequency representations begin with overlapping windows of audio, to which we apply Fourier analysis. This analysis decomposes the audio in each window and identifies the relative energy of low-frequency (i.e. bass) sounds compared to high-frequency (i.e. treble) sounds.
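The windowing and Fourier analysis described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the pipeline used in any particular product: the function name `spectrogram`, the 25 ms window, the 10 ms hop, and the synthetic test tone are all assumptions chosen for the example.

```python
import numpy as np

def spectrogram(signal, sample_rate, window_ms=25.0, hop_ms=10.0):
    """Return (times, freqs, power_db) for a mono signal.

    The window and hop lengths are illustrative defaults, not values
    taken from the article.
    """
    win_len = int(round(sample_rate * window_ms / 1000.0))
    hop_len = int(round(sample_rate * hop_ms / 1000.0))
    window = np.hanning(win_len)

    # Slice the audio into overlapping windows and taper each one.
    n_frames = 1 + (len(signal) - win_len) // hop_len
    frames = np.stack([
        signal[i * hop_len : i * hop_len + win_len] * window
        for i in range(n_frames)
    ])

    # Fourier analysis of each window gives the energy per frequency.
    spectrum = np.fft.rfft(frames, axis=1)
    power_db = 10.0 * np.log10(np.abs(spectrum) ** 2 + 1e-12)

    freqs = np.fft.rfftfreq(win_len, d=1.0 / sample_rate)
    times = (np.arange(n_frames) * hop_len + win_len / 2) / sample_rate
    return times, freqs, power_db

# Synthetic one-second signal: a strong 200 Hz tone plus a weak 4 kHz tone.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 200 * t) + 0.1 * np.sin(2 * np.pi * 4000 * t)

times, freqs, power_db = spectrogram(tone, sample_rate)
strongest_hz = freqs[np.argmax(power_db.mean(axis=0))]
```

Each row of `power_db` is one time slice and each column is one frequency bin, so plotting the transposed array as an image gives the familiar picture with time on the horizontal axis and frequency on the vertical axis.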

One exercise we frequently practiced was “spectrogram reading.” Spectrograms provide a useful view of the speech production involved in a piece of audio. Phoneticians can easily tell the difference between voiced speech (i.e. vowels or voiced consonants) and unvoiced speech (i.e. unvoiced consonants, like “sh” and “f”) by the presence or absence of voice harmonics, which appear as parallel horizontal lines in the spectrogram. Speech scientists can also decipher more nuanced differences. For example, they can tell certain vowels apart (e.g., “ee” vs “ah”) from the pattern of thick, dark bands that show the vocal tract resonances known as “formants”. They can also discriminate between different unvoiced consonants (e.g., “sh” vs “f”) based on the distribution of noise, which stems from differences in aspiration during speech production. Automatic speech recognition systems take advantage of this speech production evidence: modern approaches take the spectrogram as raw input and produce accurate estimates of what was said.
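The voiced versus unvoiced distinction can be approximated numerically. Harmonics in a spectrogram correspond to periodicity in the waveform, so one simple stand-in for "are the parallel lines there?" is the height of the normalized autocorrelation peak within a plausible pitch range. The sketch below is an illustration under stated assumptions, not a method described in this post: the function name `periodicity`, the 75 to 400 Hz pitch range, and the synthetic frames are all hypothetical choices.

```python
import numpy as np

def periodicity(frame, sample_rate, f0_min=75.0, f0_max=400.0):
    """Peak of the normalized autocorrelation within the pitch lag range.

    Values near 1 suggest a strongly periodic (voiced) frame; values
    near 0 suggest a noise-like (unvoiced) frame. The pitch range is
    an assumed default.
    """
    frame = frame - np.mean(frame)
    energy = np.dot(frame, frame)
    if energy == 0.0:
        return 0.0

    # Autocorrelation for non-negative lags, normalized by lag-0 energy.
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:] / energy

    lag_min = int(sample_rate / f0_max)
    lag_max = min(int(sample_rate / f0_min), len(frame) - 1)
    return float(np.max(ac[lag_min:lag_max + 1]))

sample_rate = 16000
t = np.arange(int(0.040 * sample_rate)) / sample_rate  # one 40 ms frame

# "Voiced" stand-in: a 150 Hz fundamental with two harmonics.
voiced_frame = sum(np.sin(2 * np.pi * 150 * k * t) / k for k in (1, 2, 3))

# "Unvoiced" stand-in: white noise, loosely like "sh" or "f".
rng = np.random.default_rng(0)
noise_frame = rng.standard_normal(len(t))

scores = {
    "voiced": periodicity(voiced_frame, sample_rate),
    "unvoiced": periodicity(noise_frame, sample_rate),
}
```

On real speech a decision threshold would have to be tuned on labeled data, and a production system would more likely learn this distinction directly from the spectrogram.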

Visualizing Voice Quality

But it is not just “what” is said that is evident in spectrograms. Speech timing, voice quality and tone-of-voice (collectively known as “prosody”) can also be interpreted from these images. Some of the recent research from the speech synthesis group at Amazon Alexa took advantage of the spectrogram differences of neutral vs whispered speech, to enable Alexa to optionally take on a whispered voice quality.

Our research at Cogito has looked at other dimensions of voice quality variation in the context of spectrograms and the relevance of this to emotion. Although emotion is an internal cognitive state which is not directly observable, the presence of strong forms of emotion often has a significant impact on our behavioral patterns. During call center conversations, voice is usually the only medium through which the customer can communicate and, as a result, particular emotional states can have a big impact on the person’s speech production. For this reason, call center voice analysis software can provide unparalleled insight into customer service interactions and inform strategy decisions.

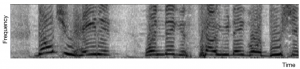

Figure 1 shows a spectrogram of a short snippet of speech audio in which the speaker was extremely exercised and upset about the issue he was discussing. This heightened emotional state quite clearly affected his speech production: you can see the harmonics (horizontal parallel lines) moving dynamically up and down, reflecting rapidly changing intonation patterns. Additionally, the darkness of the harmonics, particularly in the higher frequencies, points to a tenser overall voice quality.

Figure 1: Spectrogram of audio containing high emotional activation speech

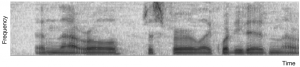

In contrast, Figure 2 shows a spectrogram of a softer, calmer voice, indicated by a noisier image with far less intensity, particularly in the higher frequencies. This visual comparison highlights the differences in speech production caused by different emotional states.

Figure 2: Spectrogram of audio containing low emotional activation speech
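One contrast between the two figures, the amount of energy in the higher frequencies, can be turned into a simple number. The sketch below compares energy above and below a cutoff for two synthetic signals. It is only an illustration of the idea, not Cogito's method: the function name `high_band_energy_db`, the 2 kHz split, and the two synthetic "voices" (harmonics that decay slowly versus quickly with frequency) are assumptions made for the example.

```python
import numpy as np

def high_band_energy_db(signal, sample_rate, split_hz=2000.0):
    """Energy at or above split_hz relative to energy below it, in dB.

    The 2 kHz split is an arbitrary illustrative choice.
    """
    windowed = signal * np.hanning(len(signal))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    high = power[freqs >= split_hz].sum()
    low = power[freqs < split_hz].sum()
    return 10.0 * np.log10((high + 1e-12) / (low + 1e-12))

def harmonic_signal(f0, decay, sample_rate, duration=0.5, n_harmonics=40):
    """Sum of harmonics of f0 whose amplitudes fall off as 1 / k**decay."""
    t = np.arange(int(duration * sample_rate)) / sample_rate
    return sum(
        np.sin(2 * np.pi * f0 * k * t) / k ** decay
        for k in range(1, n_harmonics + 1)
    )

sample_rate = 16000

# Slowly decaying harmonics: strong upper harmonics, as in Figure 1.
tense_like = harmonic_signal(150.0, decay=1.0, sample_rate=sample_rate)

# Quickly decaying harmonics: weak upper harmonics, as in Figure 2.
soft_like = harmonic_signal(150.0, decay=2.0, sample_rate=sample_rate)

tense_db = high_band_energy_db(tense_like, sample_rate)
soft_db = high_band_energy_db(soft_like, sample_rate)
```

A hand-built measure like this captures only one of the cues visible in the figures; intonation movement and noisiness would need their own measures.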

At Cogito, we use deep neural networks, which are well suited to identifying these differences and can be extremely effective at using them to classify and discriminate between different classes and dimensions of emotion. These machine learning voice technologies support sentiment analysis for both the agent and the customer. This deep understanding of how emotion affects vocal patterns helps Cogito understand the “behavioral dance” between two people during a conversation.
